The Mir Space Station was a true Soviet engineering wonder, an achievement comparable to the US landing on the Moon. Yet in its later years, Mir survived some horrific and hair-raising accidents…



The Awful and Wonderful History of the Mir Space Station

(Full Transcript)

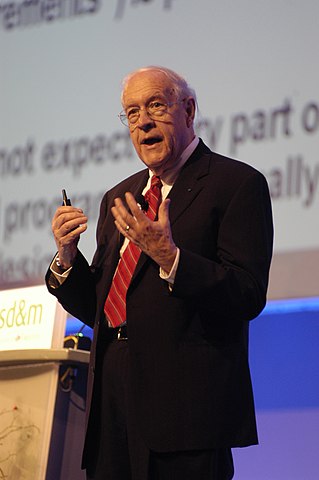

Written By: Ran Levi

From the 1950s onward, the Soviet Union and the United States competed in what was called the Space Race: each of the superpowers tried to prove its technological, moral, and economic superiority through its achievements in space exploration.

The successful landing of Apollo 11 on the Moon on July 20, 1969, was an important victory for the United States in the Space Race, and the Soviet Union was looking for a challenge that would restore some of its lost prestige.

The challenge the Russians chose was the establishment of a space station. A space station is a kind of ‘semi-permanent structure’ that allows for a much longer stay in space than a spacecraft like Apollo or even the Space Shuttle. If a spaceship is like a ship that goes on short voyages, then a space station is like a barge that one can live on for months or even years.

The First Space Station

The first space station launched by the Soviet Union was Salyut 1, in April 1971 (Salyut means ‘salute’, as in a fireworks salute). Salyut 1 was launched unmanned, and three days later the Soyuz 10 spacecraft took off with three cosmonauts who were supposed to become the station’s first occupants.

After a 24-hour journey, Soyuz 10 approached Salyut 1 and prepared to dock. It is easy to imagine the excitement the cosmonauts felt at those moments, as they got ready to open a new chapter in the history of human progress. But a malfunction in the automatic docking system left a gap of 90 mm (about three and a half inches) that prevented the two craft from locking together completely. That small gap was enough to keep the cosmonauts from opening the connecting door, and after five hours of repeated attempts to solve the problem, they had no choice but to give up.

Another crew that took off later eventually managed to open the stubborn door, but Salyut’s story illustrates the enormous technical challenge of launching and operating a space station. Outer space, with its extreme vacuum and temperatures, is so hostile to humans and human technology that even a simple operation like opening a door is far from trivial.

Salyut’s story gives us a point of reference as we tell the story of the Mir space station, home to dozens of astronauts during its fifteen years in orbit. Mir survived not only harsh environmental conditions and hair-raising accidents but also extreme political and social changes on Earth.

A Third Generation Space Station

Salyut 1 was a so-called ‘first-generation space station’: it was launched with all the supplies and equipment it needed, and when those ran out, the station’s life ended. Salyut 6 and 7 were second-generation space stations. They had two docking ports: one for the spacecraft that carried the cosmonauts to and from the station, and the other for unmanned spacecraft that delivered a steady supply of equipment, water, and food. This resupply extended the stations’ useful lives significantly.

The Americans also had their own space station: Skylab. Skylab was launched in 1973 and was, on paper, technologically equivalent to Salyut 6 and 7. Unfortunately, from its very early days Skylab suffered many technical faults in its power supply and its docking points, and the Americans, winners of the race to the Moon, found themselves lagging behind the Soviet Union when it came to long stays in space.

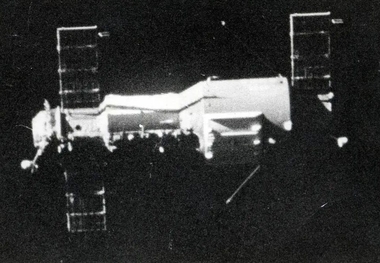

Mir was a technological leap: it was planned from the start to be modular, meaning it could be expanded gradually with modules launched separately. This approach has many advantages. For example, each module could be optimized for a specific task, such as scientific experiments, communications, or power supply. Another advantage is that because each module is launched on its own rather than the entire station at once, the launch rocket can be relatively small and cheap. Towards the end of its life, Mir was made up of seven different modules connected at odd angles to each other, and it looked a bit like a toy put together by a very incompetent child; or, if it were one of my children, by a creative, thought-provoking one, of course.

Mir’s first module, called the Base Block, left Earth in 1986, and additional modules joined it in 1987, 1989, and 1990. Putting a space station into orbit around the globe is a costly business: Mir cost the Russians over $4 billion. Why did the Soviet Union invest so much money in a space station instead of, say, launching a bunch of regular spacecraft that stay in space for a few days and then return to Earth?

Part of the answer is, as you might imagine, national pride. The Soviet Union was very proud of its advanced space station and invited many countries, especially its allies, of course, to send their own cosmonauts to Mir. For example, Syria and Afghanistan sent their first cosmonauts to Mir in 1987 and 1988, respectively.

From a more practical angle, a space station allows us to perform scientific experiments that cannot be performed on Earth, or even aboard an ordinary spacecraft.

Bone Mass Loss in Free Fall

‘Free fall’ is the sensation of weightlessness we experience when we fall with an acceleration equal to the acceleration of the Earth’s gravity. Almost all of us have felt free fall at some point: for example, when jumping down from a high place or riding a roller coaster. Many phenomena, from the behavior of animals and plants to chemical processes, occur quite differently in free fall than in normal gravity, and studies in this field may have interesting and unpredictable consequences.

Most of the physiological changes that occur in our body during a long stay in free fall are reversible: after returning to Earth, the body returns to its original state after a few days of good rest. The main exception is the loss of bone mass, which can cause long-term damage and can even be life-threatening.

We are used to thinking of our bones as permanent and unchanging, but they are not. There are two types of bone cells: osteoblasts, which constantly build bone, and osteoclasts, which break it down. The two types of cells work in concert, creating a stable equilibrium in which bone mass remains constant. In free fall, however, this balance is disturbed: for reasons that are not yet understood, the bone-building cells slow down, while the bone-breaking cells continue at the same rate. The result is a gradual loss of bone mass, similar to the osteoporosis common in older people. But while an adult loses about one and a half percent of their bone mass per year, an astronaut in free fall loses one and a half percent … a month.

On short space flights of a few weeks, the loss of bone mass is not a serious problem, and the body recovers after returning to Earth. Flights to other planets, however, may take months or even years. By the time astronauts reach their destination, their bones may be so brittle that any blow could cause dangerous cracks and fractures and prevent them from performing the task for which they were sent. In other words, finding a way to prevent or slow bone loss is a necessary step on the way to conquering space.
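To make the gap between "one and a half percent a year" and "one and a half percent a month" concrete, here is a minimal Python sketch. It assumes, purely for illustration, that the loss compounds at a constant rate on whatever bone mass remains; real bone loss varies from person to person and from bone to bone, and the function and constant names are hypothetical.

```python
# Rates quoted in the text; the constant-compounding model is an illustrative assumption.
EARTH_LOSS_PER_YEAR = 0.015       # ~1.5% per year for an adult on Earth
FREE_FALL_LOSS_PER_MONTH = 0.015  # ~1.5% per month for an astronaut in free fall


def remaining_fraction(months: float, loss_rate: float, period_months: float) -> float:
    """Fraction of the original bone mass left after `months`, assuming the
    remaining mass shrinks by `loss_rate` every `period_months`."""
    return (1.0 - loss_rate) ** (months / period_months)


if __name__ == "__main__":
    for months in (1, 6, 12, 24):
        earth = remaining_fraction(months, EARTH_LOSS_PER_YEAR, 12)
        space = remaining_fraction(months, FREE_FALL_LOSS_PER_MONTH, 1)
        print(f"{months:>2} months: Earth {earth:.1%} left, free fall {space:.1%} left")
```

Because the same percentage is applied per month instead of per year, one month in free fall costs about as much bone as a year on Earth in this model, so a year-long mission corresponds to roughly twelve years of ordinary bone loss (about 83 percent of the original mass left).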

Some of Mir’s cosmonauts spent many months in space. Cosmonaut Valery Polyakov, for example, holds the world record for the longest single stay in space: 437 consecutive days aboard Mir. These long stays in free fall allowed scientists to test different techniques for coping with bone loss and muscle weakness. For example, the cosmonauts wore special elastic suits, took supplements and experimental drugs, and exercised for two to four hours each day on bicycle and running equipment tied down with bungee cords. Exercise, in particular, had a positive effect on muscle strength, but not on bone loss. Research on this subject continues today with astronauts on the International Space Station, but no solution to the problem has yet been found. Researchers hope that a breakthrough, if one comes, will also help osteoporosis patients on Earth.

The Last Citizens of the Soviet Union

More than a hundred cosmonauts visited Mir during its 15 years, but perhaps the most famous of them is Sergei Krikalev. Krikalev began his career as a mechanical engineer in the Soviet space industry, and in 1985 he was selected to be a cosmonaut. He visited Mir for the first time in 1988 and spent nearly six months there. By 1991, Mir was approaching the end of its planned five-year life span, but its technical condition was good enough to continue operations. Krikalev volunteered for another mission, and in May 1991 he took off for the space station again.

When Krikalev left Soviet soil, the mighty Soviet empire was already in the throes of severe internal upheaval. Mikhail Gorbachev’s political power was waning, Boris Yeltsin was sweeping public opinion, and in the Baltic republics more and more voices were calling for rebellion against the central government. Krikalev was due to return to Earth five months later, in October 1991, but pressure from Kazakhstan on the space agency led to a change in plans. The Kazakhs demanded that a cosmonaut of Kazakh origin be sent to the space station and were unwilling to compromise. Since all launches and landings took place in Kazakhstan, the Russians had no choice: they replaced one of the cosmonauts of Soyuz TM-13, the spacecraft that was about to take off for Mir, with a Kazakh cosmonaut. Unfortunately, the Kazakh cosmonaut did not have enough training for a long stay on a space station, so Krikalev was asked to remain on Mir for another tour, together with another cosmonaut, Alexander Volkov. Interestingly, someone in the space agency forgot to inform the military bureaucracy of this development: Krikalev was supposed to report for military reserve duty and was almost issued a warrant for desertion, before the army realized that their reserve soldier was not even on Earth.

Two months later, on December 26, the Soviet Union dissolved. Many in the West feared that in the chaos of the disintegrating empire, Mir would be forgotten and abandoned. The cosmonauts were totally dependent on a steady supply of water, air, and food delivered by other spacecraft, and it was hard to believe that anyone in Russia could arrange the launch of a supply ship under such circumstances. The very fact that Krikalev had stayed on Mir instead of returning to Earth strengthened the impression that he and his colleague had been abandoned in orbit.

In practice, however, Krikalev and Volkov were not abandoned and were never in danger. The Russian space agency continued to function admirably, and the plans for the replacement crew went ahead. In addition, Mir always had a ‘lifeboat’: a Soyuz spacecraft docked to the station, in which the cosmonauts could return to Earth whenever they wanted. Krikalev later said that he had followed the events on the ground very closely, but he was never afraid for his life.

In March 1992, the spacecraft carrying the replacement crew was launched, and Krikalev and Volkov returned to Earth. The world they returned to was very different from the one they had left… The Soviet Union had disappeared, Kazakhstan had become an independent state, and even Krikalev’s hometown, Leningrad, had changed its name back to St. Petersburg. Krikalev and Volkov still wore the Soviet Union’s insignia on their uniforms and carried their Communist Party membership cards in the pockets of their suits. They went down in history as “the last citizens of the Soviet Union.”

Sergei Krikalev flew to space four more times and for years held the title of ‘the human who’s spent the most time in space’: 803 days over six flights. Mir continued to be continuously manned and regularly resupplied. But even though it was orbiting 300 km (186 mi) above the ground, Mir could not escape the consequences of the political earthquake below it. The upheaval on the ground had very practical implications for the operation of the station, as well as for its level of maintenance.

A New Kind of Peace

The breakup of the Soviet Union opened a window of opportunity for interesting cooperation between NASA and the Russian space agency. The Americans wanted to gain experience with long stays in space in preparation for the International Space Station, which was supposed to be launched at the end of the 1990s. The Russians, for their part, desperately needed funding. The Mir space station, launched in 1986, met the needs of both sides.

In 1992 President George H.W. Bush and Boris Yeltsin signed a cooperation agreement: American astronauts would join cosmonauts on Mir, in exchange for several hundred million dollars to be transferred to the Russian space agency.

The new cooperation was a great success. Space Shuttles flew to Mir, and American funding helped the Russians launch four new modules and significantly upgrade the station. For those who grew up in the Cold War era, the images of an American Space Shuttle orbiting the Earth alongside a Russian space station were amazing and full of hope, demonstrating better than any diplomatic treaty the change in relations between the two powers.

Mold And Fungus

But time cannot be stopped, and the passing years began to leave their mark on the aging space station: the solar panels that supplied its electricity gradually lost efficiency, and random malfunctions struck its various computers and devices. Moreover, Mir was like a small, crowded ship that had not put into port or been thoroughly cleaned for over a decade. I’ve been on ships in my time: trust me, you don’t want to live in such a place.

For example, Jerry Linenger arrived on Mir in January 1997. Linenger was the fourth American astronaut on the space station and joined two cosmonauts who had been in space for several months. In interviews and in his book, Linenger described the smell of the air on Mir: the stink of mold and fungus. No one was surprised. When we talk about the dangers and challenges of life on a space station, we usually think about the harmful effects of cosmic radiation or the loss of bone mass we discussed earlier, but few people are aware of the dangers of fungal contamination. The molds and fungi the cosmonauts brought with them from Earth found a home in hidden corners, behind tubes and electronic panels, and grew unchecked for years. The fungi penetrated tiny cracks and widened them, feeding on plastic and weakening metals. Since the station could not be aired out and the equipment could not be dismantled and cleaned thoroughly, the accumulated damage from fungi could be significant. Linenger, a doctor by training, examined the crew’s health and saw no evidence of medical harm from fungal infections, but admitted there were dark corners on the space station that no one was willing to put their hands into…

The Fire on Mir

In February 1997, a few weeks after Linenger’s arrival on Mir, the Soyuz TM-25 spacecraft docked at the space station. It carried three cosmonauts, two of whom, Alexander Lazutkin and Vasily Tsibliyev, were supposed to replace the current cosmonauts and remain with Jerry Linenger for several months. February 23 was a Russian holiday, and the six crew members celebrated with a rich dinner that included dishes rarely seen on the space station, such as caviar, hot dogs, and cheese. They sang, played guitar, told jokes, and spent a particularly enjoyable evening.

Mir was designed to accommodate three cosmonauts on a regular basis, and its oxygen recycling facilities could supply oxygen for only three people. Six laughing and singing crew members placed an unusually heavy load on the oxygen supply, and at some point they felt that the oxygen level in the air was beginning to drop. This was not unusual on Mir during crew handovers, and for exactly that purpose a backup oxygen generator had been installed on the station. It produced oxygen from a kind of chemical ‘cassette’ inserted into a special furnace: heating the compound to about 700 degrees Celsius (roughly 1,300°F) made it decompose and release the oxygen it contained.

Cosmonaut Alexander Lazutkin left the dinner table and floated into the corridor where the backup generator was installed. He took a new cassette from the cabinet, put it in the furnace, and turned the handle. It was a perfectly routine operation, performed at least two thousand times, if not more, over the years. But as he turned to go back to the dinner table, he heard an unfamiliar hiss behind him. He turned around and froze. A huge jet of flame, a small volcano, as he later described it, burst out of the generator. Molten metal was spattering onto the opposite wall of the corridor.

A fire inside a sealed structure like a space station is much more dangerous than a similar fire in a closed building on Earth, since the thick smoke and hot air have nowhere to go, and the flames can consume all the oxygen in the air within minutes. Given the dangers of a fire inside a space station, you’d expect the astronauts to be well trained in fighting fires, right? They weren’t. Part of the reason was the poor design of the fire extinguishers mounted on the station’s walls, which prevented the cosmonauts from using them for practice: the extinguishers were designed so that the moment one was removed from its place, a chemical process began that made it useless three months later.

Predictably, this lack of preparation worked against the cosmonauts in those fateful moments. Lazutkin tried to smother the fire with a wet towel, but within seconds the entire corridor was filled with thick smoke. The other crew members struggled to put on their smoke masks, some of which were faulty. Some of the fire extinguishers secured to the walls could not be released. The flames, about half a meter (one and a half feet) long, were licking the walls of the station; if the metal melted and a hole formed in the hull, the fire would become the least of the cosmonauts’ concerns…

To make matters worse, the fire had broken out in the corridor leading to one of the two escape spacecraft, blocking access to it. That meant that at best only three of the six could escape back to Earth, while the other three would remain on the station.

Fortunately, the cosmonauts managed to open three fire extinguishers and were able to take control of the flames before they spread to the rest of the station. Thick smoke filled the station for hours until the air circulation system was able to filter it completely.

The Russian space agency received reports of what had happened on Mir but outwardly projected an atmosphere of “business as usual”: in the reports transmitted to NASA, the fire was described as a small one that lasted a few dozen seconds. Only when Jerry Linenger returned to Earth and told his story did his managers at NASA understand how close the space station had come to a terrifying disaster… Under American pressure, a comprehensive investigation was conducted, and the cause of the oxygen generator failure was discovered. Workers at the manufacturing plant wore flexible latex gloves to protect their hands from the cassettes’ chemical compound; a piece of latex found its way into one of the cassettes, and during heating it disrupted the chemical reaction and made it unstable. New safety and quality assurance procedures were introduced at the plant, and NASA hoped that Mir’s troubles were over. They were wrong.

Mir’s Collision with Progress

A few months passed. Jerry Linenger left Mir and was replaced by astronaut Michael Foale. With Foale were Lazutkin and Vasily Tsibliyev, the cosmonauts who were there during the fire incident. In the 1990s, Russia’s economic situation was extremely difficult, and the money the Americans poured in was not enough to solve the budget problems of the Russian space agency.

The Progress cargo ship was an unmanned spacecraft that docked at Mir every few months, bringing supplies from Earth. Progress was equipped with an automatic system that allowed it to dock with the space station autonomously, but this system was manufactured in Ukraine, and the Ukrainians demanded a lot of money for it. To cut costs, the Russian space agency switched from the autonomous system to a cheaper manual docking system called TORU. TORU let cosmonauts on the station fly the approaching spacecraft by remote control: a video camera on the spacecraft sent images to a screen on the station, and the operator steered the spacecraft with joysticks, like a video game.

In 1997, a Progress spacecraft docked at the space station. As always, the cosmonauts unloaded the equipment it had brought and filled it with the garbage that had accumulated on the station in recent months. This time, the control center decided to take the opportunity to test the TORU manual docking system before Progress returned to Earth. They ordered Tsibliyev, the station’s commander, to undock Progress from Mir, fly it by remote control several kilometers away, turn it around, and then re-dock it at the station.

Tsibliyev was not at all enthusiastic about the idea. A few months earlier he had tried to perform the same exercise and encountered many difficulties: a technical malfunction affected the video camera on the spacecraft, and combined with his inexperience with the manual system, it caused the spacecraft to miss the station and fail to dock. Tsibliyev believed that such a dangerous exercise should not be carried out without thorough preparation, but perhaps because of the rigidly hierarchical culture of the Soviet military system, he didn’t voice his objections to his superiors.

The exercise began on June 25. Tsibliyev grabbed the steering handles of the TORU system and flew Progress away from the space station. Then he turned it on its axis and began to fly it back toward the station. This time the Progress video camera was working properly, but the images it passed to the screen in front of Tsibliyev were almost useless. Progress photographed Mir from above, against the background of the earth – and Tsibliyev could not see the space station against the white clouds below it. He asked Lazutkin to look out the windows and report to him the location of the approaching supply craft.

In the absence of a reasonable video image, Tsibliyev incorrectly assessed the speed of Progress’s approach. Lazutkin shouted to him that the ship was approaching too fast. Tsibliyev used Progress’s braking rockets, but these had little effect. Lazutkin told Michael Foale, the American astronaut, to prepare the lifeboat, and looked back out the window at the approaching spaceship.

“I watched the black, speckled body of the spaceship pass under me, bent down to look closer, and at that moment there was a tremendous thump, and the whole station shook.”

Progress hit one of Mir’s solar panels, then rebounded and hit the station itself. The point of impact was on a module called Specter, which housed electrical systems that provided about half of the station’s power. A few seconds after the strike, the crew heard the sound no cosmonaut ever wants to hear: the low air pressure alarm. But even without the alarm, the cosmonauts immediately realized they were in trouble; they could feel the air pressure dropping, and the reason was clear: a hole had opened in the side of the station.

Now the cosmonauts were racing against the clock; they had to discover the source of the leak and block it in a matter of minutes. If they failed, they would have to rush to the lifeboat and hope they could escape the station on time.

Lazutkin and Foale identified the point of impact on the Specter module and decided to close the hatch leading to it, sealing the module off from the rest of the station. Unfortunately, eighteen power cables ran through the hatchway and prevented them from closing it. Having no choice, they decided to cut the cables on the spot, without even shutting off the electricity first. Under a shower of dangerous sparks, the two cut the cables and managed to close the hatch to Specter before the air ran out. The initial emergency was dealt with, but this was only the beginning. The impact sent the station into an uncontrolled spin, which meant that even the solar panels not damaged in the collision were no longer pointed at the sun and no longer supplied electricity. The backup batteries lasted only half an hour before they were depleted.

A space station is normally a noisy place: at any given moment, numerous devices, air conditioners, and other systems are buzzing and beeping. But now, without electricity, Mir was quiet… quiet as a cemetery. Michael Foale described it this way, quote:

“Within twenty to thirty minutes of the collision, every piece of equipment at the station stopped working, the station was completely quiet. It was a very strange feeling, to be in a space station with no sound but your own breathing. And then it started to be cold, very cold.”

Unable to communicate with the control room on Earth, the crew had to find a way to stop Mir’s spin and point the solar panels back at the sun. At their disposal was the Soyuz spacecraft docked to the station, whose rockets could change the station’s rate of rotation, but the amount of fuel was very limited, and if they used it all up, they would not be able to abandon Mir and return to Earth if necessary. Without computers or navigation instruments, the crew did not know when to fire the rockets or for how long.

Having no other choice, Michael Foale and his colleagues went back to basics. Like sailors of ancient times, they peered at the stars through the station’s windows and tried to calculate the spin rate with basic high school math. After several misjudged rocket firings, they finally managed to slow the spin enough to let the panels absorb a few minutes of sunlight once every hour and a half.
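The "basic high school math" can be illustrated with a minimal Python sketch: if a star takes a measured time to drift across a known angle in the window, the spin rate is that angle divided by the time. The sketch assumes a steady spin about a single axis; the function names and the sample observation are hypothetical and not the crew's actual measurements.

```python
def spin_rate_deg_per_s(angle_deg: float, elapsed_s: float) -> float:
    """Estimate the spin rate from how far a star drifts across a window.

    Stars are so distant that the station's orbital motion does not visibly
    shift them, so their apparent drift reflects only the station's rotation.
    """
    if elapsed_s <= 0:
        raise ValueError("elapsed time must be positive")
    return angle_deg / elapsed_s


def seconds_per_turn(rate_deg_per_s: float) -> float:
    """Time for one full 360-degree rotation at a steady spin rate."""
    return 360.0 / rate_deg_per_s


if __name__ == "__main__":
    # Hypothetical observation: a star drifts 30 degrees across the window in 60 seconds.
    rate = spin_rate_deg_per_s(30.0, 60.0)
    print(f"{rate:.2f} deg/s, one turn every {seconds_per_turn(rate):.0f} s")
```

Turning such an estimate into a decision about when and how long to fire the rockets would also require the thrust and mass properties of the station and the Soyuz, which the story does not give, so that step is left out here.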

With this partial power supply, the crew continued to restore Mir to health piece by piece. The next step, after regaining communications with the control room on the ground, was to restore power to a module called Kvant 2, because that was where the toilets were… After thirty exhausting hours, Mir came back to life. The Specter module, however, remained sealed off from the rest of the station for good, and the cosmonauts later made several spacewalks inside the punctured module to retrieve equipment and examine the damage.

Flight controllers at the Russian space center blamed Vasily Tsibliyev for the accident and even fined him and cut his pay. They claimed that Tsibliyev had performed the Progress docking exercise carelessly, deviated from procedure, and skipped vital checks. The cosmonauts, for their part, were convinced that the negligence lay with the ground crews: the order to perform the exercise had been given without Tsibliyev properly practicing with the manual steering system, and without anyone working out the best maneuver for the docking.

A New Threat for Mir

No matter whose fault the collision was, this dangerous accident marked the beginning of the end for Mir, a space station that was over ten years old at that time. Beyond the ever-increasing danger posed to cosmonauts in the aging space station, a new threat to Mir’s safety appeared in the form of the International Space Station. The International Space Station was a joint venture of space agencies from several countries, including the United States and Russia, each of which was supposed to contribute its own budget to the project. Russia, a country that was already in a difficult financial situation, simply did not have enough money to finance two space stations.

In 1998, the Russian space agency announced that Mir would be brought back to Earth in June 1999. In Russia, many citizens still regarded Mir as a source of national pride: they tried to overturn the decision and even raised public funds to keep it running. The result was a $15,000 donation, barely enough to fund the station for two or three days.

Another hope for saving Mir came in the form of a 51-year-old British businessman named Peter Llewellyn, who promised to pay $100 million for a week-long visit to the space station. The Russian space agency had almost begun counting the money when investigative reports in the Western press poured cold water on the whole thing: Llewellyn, the papers claimed, was a professional con artist who routinely made grandiose promises and deceived his business associates. As far as we know, these claims were never officially confirmed, but the fact is that Llewellyn failed to raise even $10 million in advance payments, and the deal never went through. In August 1999 the last crew left Mir and returned to Earth. For the first time in nearly ten years, the station was unmanned.

But just when everything seemed over, and Mir had one solar panel in the grave, as the cliché goes, an unexpected hero suddenly appeared to save it. Walter Anderson was an American businessman who made his fortune in telecommunications and in the 1980s decided to devote his time and energy to developing private space initiatives. At that time, we should remember, space exploration was the exclusive domain of government space agencies, and for good reason: launching a manned spacecraft was so expensive that it was hard to imagine a commercial company making the necessary investment, let alone turning a profit. Nevertheless, Anderson worked tirelessly to advance his vision, and at the end of the 1980s he financed the founding of the International Space University, which is still active today, headquartered in Strasbourg, France, with branches in many countries around the world.

In 1999, Anderson and a partner set up a company called MirCorp to do something that had never been done before: operate Mir as a private, commercial space station, independent of governments. MirCorp was jointly owned by Anderson and his partners and by RSC Energia, a Russian spacecraft manufacturer. Energia was responsible for maintaining and operating the station, while Anderson and his staff managed the business and commercial side.

Anderson did a great job, and within a few months closed his first successful deal: Dennis Tito, an American businessman, wanted to be the first tourist in space and was willing to pay several million dollars to make it happen. Another agreement was signed with the NBC television network, which planned a reality show called ‘Destination: Mir’. As you can guess, the show was to follow the familiar reality TV format in which participants go through trials and are kicked out if they fail. In this case, the volunteers were supposed to undergo strenuous astronaut training, at the end of which one of them would fly to Mir.

When Walter Anderson paid the Russian space agency an advance of seven million dollars, the Russians understood that he meant business, and went into action. Mir was already in a low orbit, preparing for its return to Earth, and an unmanned spacecraft was sent to boost it to a higher orbit. In April 2000 a historic milestone was achieved: two cosmonauts hired by MirCorp flew to the Russian space station, the first time a private company had sent a crew into space. The two stayed on Mir for seventy-three days, fixed problems, and prepared the station for its future as a television studio and a hotel for wealthy tourists.

But not everyone was happy with this success. Walter Anderson’s supporters claimed that NASA had begun to exert enormous political pressure on the Russian space agency to sever its ties with MirCorp and end its commercial space cooperation, but we have not found any official confirmation of these claims. However, according to various press reports, NASA was very worried that the Russians were so eager to make money from private space ventures that they would abandon their commitment to the International Space Station.

Conspiracy theory or not, MirCorp failed. None of its business ventures ever took off, although Dennis Tito did take off a year later, to the International Space Station, fulfilling his dream of becoming the first tourist in space. A few years later Walter Anderson was arrested and charged in what was described as the largest individual tax evasion case in the history of the United States, and he was sentenced to eight years in prison. MirCorp closed its doors in 2003. Without funding and without a future, Mir was a dead-spaceship-walking, and its fate was sealed.

How To Kill a Space Station?

So how do you ‘kill’ a space station? In principle, the matter is simple: allow it to fall into the atmosphere and let the friction with the air burn it and break it up into tiny particles. But when it comes to a big, heavy object like a space station, nothing is so simple.

Skylab, the US space station, is a representative example. Skylab was as big as two buses and weighed more than seventy tons, and NASA engineers feared that friction with the atmosphere would not be enough to break it up, so that it would crash on Earth still intact. It was also impossible to predict with certainty where on Earth the station would fall. This combination of factors led many to fear that Skylab would crash in a densely populated urban area. The chances of this were very slim, less than one in a thousand, but as always in such cases, the media fanned the flames and covered the story in detail. In the end, when Skylab returned to Earth on July 11, 1979, it only partially broke up: large parts of it, such as a huge oxygen tank, survived re-entry and crashed in Australia, not far from a small town called Esperance. No one was hurt, but the local authorities fined NASA $400 for littering. NASA ignored the fine, but in 2009 a radio DJ in California raised the money from his listeners, sent a check to the Australian town, and finally closed the thirty-year saga.

The Russian engineers responsible for bringing Mir down directed the station to crash into the South Pacific, far from any human habitation. Nevertheless, the American fast-food chain Taco Bell tried to ride the wave of public concern with an original marketing campaign. Taco Bell placed a huge plastic sheet marked with a bullseye about ten miles off the Australian coast. If any part of the falling station hit the target, Taco Bell promised, every American would get a free taco.

The managers of Taco Bell were not stupid: they knew that the chances of this actually happening were negligible, but just to be on the safe side, they bought insurance. In the end, it was not needed. In March 2001, Mir burned up in the atmosphere almost perfectly, and only a small piece of it was eventually detected in the Boston area of the northeastern United States. The story of the Mir space station was finally over.

An Engineering Marvel

When reading about Mir’s accidents and mishaps over the years, it is easy to mistake it for a failure. Nothing could be further from the truth. The mere fact that Mir remained in space for fifteen consecutive years and was continuously manned for almost that entire period was a tremendous technological achievement, a success comparable to the American landing on the Moon. This impressive success was achieved by the Soviet Union alone, using outdated technology such as analog computers and spacecraft designed in the seventies. The Russians have good reason to be proud of Mir.

Mir’s history is fascinating because it reflects the enormous social and technological transformations that took place on Earth at the end of the twentieth century. Mir began its life as part of the Cold War between the Soviet Union and the United States, built and maintained by a state according to the ‘classical’ model that had governed space exploration since its inception. Fifteen years later, it was the shared home of Russian cosmonauts and American astronauts who ate, drank, and played the guitar together, that is, when they were not busy putting out fires and generally trying to survive. The last crew to visit the station was a privately funded one, marking the beginning of the era we live in today: an era in which private companies send spacecraft to the International Space Station, and teams from around the world plan to land robots on the Moon.

Two space stations are currently orbiting the Earth: the International Space Station and China’s Tiangong-1, both designed, to one degree or another, with the lessons learned during Mir’s long years in space. Whether the first person to reach Mars is an American astronaut, a Russian cosmonaut, or a Chinese taikonaut, he or she will probably have good reason to thank Mir and the brave cosmonauts who lived aboard it.

Music Credits

https://soundcloud.com/stevenobrien/foreboding-ambience

https://soundcloud.com/jordan-craige/space

https://soundcloud.com/tonspender/unbalanced-trip

https://soundcloud.com/mideion/insomnia

https://soundcloud.com/nihili-christi/tumore-spirituale

https://soundcloud.com/rhetoric-1/film-noir-restored

