Software errors and random bugs are rather common: We’ve all seen the infamous Windows “blue screen of death”… But is there really nothing we can do about it? Are these errors – from small bugs to catastrophic mistakes – inevitable? In this episode, we’ll tell the story of FORTRAN, the groundbreaking high-level computer language, and the sad, sad tale of the Denver Airport Baggage Disaster. Don’t laugh, it’s a serious matter.


Are Software Bugs Inevitable?

Written By: Ran Levi

Part I

A car that breaks down once every few weeks is simply unacceptable. We expect our cars to have a certain level of reliability. But when it comes to computers – software errors and random bugs are rather common. We’ve all seen the infamous Windows “blue screen of death”, and most smartphones require a reboot every once in a while.

In a way, we’ve learned to live with software errors and take them for granted. But is there really nothing we can do about it? Are these errors – from small bugs to catastrophic mistakes – inevitable, or is there hope that as technology and innovation move forward, we’ll be able to overcome this annoying problem – and make software bugs a thing of the past? This will be the main question of this episode.

How Software Works

Software bugs are nothing new, of course. Writing computer software in the 1940s and 50s was a complicated and difficult task, so it’s no wonder that the first generation of computer programmers considered bugs and errors inevitable.

But let’s start from the basics, with a quick refresher on how computer software works. A computer is a pretty complex system, and its two main parts are the processor and the memory. The memory cells contain numbers; the processor’s role is to read a number from the memory, apply a mathematical operation to it, such as addition or subtraction, and then write the result back to the memory.

Software is a sequence of commands telling the processor where certain information is located in the memory and what needs to be done with it. Think of the information in a computer’s memory as food ingredients: the software is the recipe. It tells us what we need to do with the different ingredients at every given moment. A software error is an error in the sequence of commands. It might be a missing command, two commands given in the wrong order, or an altogether wrong command.

Just Plain Numbers

So why were software bugs seen as a fact of life back in the 1950s? Back then, both information and commands were given not as words – but as plain numbers. For example, the numeral forty-two might represent the command “copy,” so that the sequence 42-11-56 might represent an action like: “copy the content of memory cell eleven to memory cell fifty-six.” Even a mildly complicated calculation, like solving a mathematical equation, might require hundreds – if not thousands – of such command sequences. Each such sequence had to be perfectly correct, or else the entire calculation might fail – just like in baking: if we put the frosting on the cake before baking the batter, that cake will be a disaster.
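To make the idea of “just plain numbers” a bit more concrete, here is a minimal sketch of a toy machine in Python. It is not a model of any real 1950s computer: the opcode 42 for “copy” comes from the example above, while the opcode 7 for “add” and the function name `run` are invented purely for illustration. The sketch shows how swapping two commands, the frosting-before-baking mistake, silently changes the result.

```python
# Toy machine: memory is a list of numbers, a program is a list of numeric triples.
COPY = 42  # from the text: 42-11-56 means "copy cell 11 to cell 56"
ADD = 7    # hypothetical opcode, invented for this sketch


def run(program, memory):
    """Execute each (opcode, source, destination) triple in order."""
    for opcode, src, dst in program:
        if opcode == COPY:
            memory[dst] = memory[src]
        elif opcode == ADD:
            memory[dst] = memory[dst] + memory[src]
        else:
            raise ValueError(f"unknown opcode {opcode}")
    return memory


memory = [0] * 64
memory[11] = 5
memory[12] = 3

# Intended order: copy cell 11 into cell 56, then add cell 12 to it.
correct = run([(42, 11, 56), (7, 12, 56)], list(memory))
# Same two commands in the wrong order: the copy overwrites the sum.
swapped = run([(7, 12, 56), (42, 11, 56)], list(memory))

print(correct[56])  # 8
print(swapped[56])  # 5
```

Nothing crashes in the second run, and the machine raises no complaint. The answer is simply wrong, which is exactly what made these bugs so hard to find.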

But software is even less forgiving than baking. You can’t make even a small mistake, because then the entire calculation will fail. It’s like a house of cards. It’s no wonder then that at the time, only computer fanatics were willing to devote their time to programming. It was a truly Sisyphean task.

Assembly

In the late 1940s, a new computer language was developed. It was called “Assembly.” Strictly speaking, Assembly is not the same thing as raw “machine language,” but it sits just one small step above it. Assembly replaced some of the numbers with meaningful words that were somewhat easier to remember. For example, the number 42 was replaced by the word MOV. These textual commands were then fed to a special program named the “Assembler” that converted the words back to numbers – since that’s ultimately what computers understand.
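The Assembler’s job can be sketched in a few lines of Python. This is only an illustration, not a real assembler: the mapping of MOV to 42 follows the example above, while ADD and its number 7, as well as the function name `assemble`, are assumptions made up for this sketch.

```python
# Word-to-number table. MOV = 42 follows the text; ADD = 7 is hypothetical.
MNEMONICS = {"MOV": 42, "ADD": 7}


def assemble(source):
    """Turn lines such as 'MOV 11 56' into numeric commands such as [42, 11, 56]."""
    program = []
    for line in source.splitlines():
        if not line.strip():
            continue  # skip blank lines
        mnemonic, *operands = line.split()
        if mnemonic not in MNEMONICS:
            raise ValueError(f"unknown command: {mnemonic}")
        program.append([MNEMONICS[mnemonic]] + [int(value) for value in operands])
    return program


numbers = assemble("MOV 11 56\nADD 12 56")
print(numbers)  # [[42, 11, 56], [7, 12, 56]]
```

Notice that the translation is one-to-one: every line of text becomes exactly one numeric command. The words are easier to read, but the programmer still has to spell out every single step.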

For programmers, Assembly represented a real improvement: for humans, words are much easier to work with than random numbers. But Assembly didn’t eliminate software bugs. It was still too “Low Level” – meaning even simple calculations still required thousands upon thousands of code lines. Programming was still an exhausting and Sisyphean task.

John Backus

One of those exhausted programmers was John Backus. Backus was a mathematician who worked for IBM, calculating the trajectories of rockets. He absolutely hated programming in Assembly and the tedious process of finding and eliminating software bugs.

So in 1953 Backus decided to do something about it. He wrote a memo to his supervisors and suggested that IBM should develop a new software language that would replace Assembly. This new language, wrote Backus, would be a “High-Level Programming Language”: each individual command would represent numerous Assembly commands. For example, you could use the command “Print” – and “behind the scenes” it would invoke hundreds of simpler Assembly commands that would handle the actual process of printing a character on the screen or on a page. This level of abstraction would make programming easier, simpler – and hopefully, a lot less prone to errors.

But if a High-Level programming language was such a great idea, how come no one thought about it before? Well, it turns out that Backus wasn’t the first. In fact, similar computer languages were developed as early as the 1940s, and many computer scientists found them to be a fascinating research subject. In reality, though, none of these early high-level languages threatened Assembly’s dominance. Why is that?

Recall that the computer, as a rule, understands nothing but numbers. Much like Assembly had to have an “Assembler” to translate the textual commands to numbers, a high-level programming language needs a special piece of software called a “Compiler” to translate the high-level commands. Unfortunately, the translated code produced by the early compilers was very inefficient compared to code written in Assembly by a human programmer. The compiler could create ten lines of code – where a human, with a bit of creative thinking, could accomplish the same task with a single line.
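Here is a rough sketch of what “translating a high-level command” means. It is a toy, nowhere near a real compiler, and it handles only statements of the form `target = x + y + ...`. The instruction names LOAD, ADD and STORE and the function name `compile_sum` are assumptions chosen for this illustration, not the instruction set of any particular machine.

```python
def compile_sum(statement):
    """Translate 'target = x + y + ...' into a list of toy low-level instructions."""
    target, expression = (part.strip() for part in statement.split("="))
    terms = [term.strip() for term in expression.split("+")]

    instructions = [f"LOAD {terms[0]}"]      # bring the first value into the processor
    for term in terms[1:]:
        instructions.append(f"ADD {term}")   # add each remaining value in turn
    instructions.append(f"STORE {target}")   # write the result back to memory
    return instructions


code = compile_sum("total = a + b + c")
print(code)  # ['LOAD a', 'ADD b', 'ADD c', 'STORE total']
```

One readable line turns into four low-level ones. A compiler that translates mechanically, like this one, never looks for shortcuts, which hints at why early compilers tended to produce longer and slower code than a clever human would.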

This inefficiency meant that software written in high-level code tended to be slow. Since in the 1940s and 50s, computers were already weak and slow, this hit in computation performance was a penalty that no one was willing to pay. And so, high-level programming languages remained an unfulfilled promise.

A High-Level Language

Backus was aware of the challenge, but he was determined. In his memo to IBM, he stressed the potential financial benefits: programming in a high-level language could reduce the number of bugs in a software project, shorten development time, and reduce costs by as much as seventy-five percent.

Backus made a convincing argument, and IBM’s CEO approved his idea. Backus was made the head of the development team and recruited talented and enthusiastic engineers who welcomed the challenge of creating this high-level language. They worked days and nights, and often they slept at a hotel near the offices in order to get available computer time even before sunrise.

Their main task was writing the compiler: the software that translated the high-level code to low-level machine language. Backus and his people knew that the success of their project depended on the compiler’s efficiency: if the code it produced was too inefficient, their new language would fail just as its predecessors did.

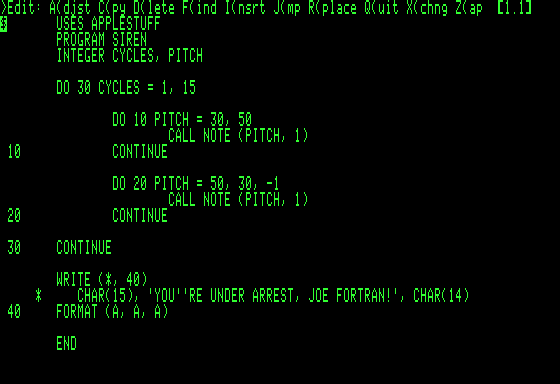

Four years later, in 1957, the first version of the new high-level programming language was ready. Backus named it FORTRAN, short for Formula Translation. The name reflects what it was intended for: scientific and mathematical calculations. IBM had just launched a new computer model named IBM-704, and Backus sent the new compiler and a detailed FORTRAN manual to all customers who bought the new computer.

FORTRAN Takes The World By Storm

The response was overwhelmingly positive. Programmers were delighted by how easily and quickly they could write software using FORTRAN! One customer recalled how shocked he was to see one of his colleagues – a physicist in a nuclear research institute – writing a complicated piece of software in a single afternoon, whereas writing the same code in Assembly would have taken several weeks!

FORTRAN took the software world by storm! Within less than a year, almost half of the IBM-704 programmers were using FORTRAN on a daily basis. It was the salvation that all bleary-eyed programmers had prayed for: code written in FORTRAN was up to twenty times shorter than the same code written in Assembly, while still being efficient and blazingly fast. In fact, the new FORTRAN compiler was so successful that it was considered the best of its kind even twenty years later.

This was a big stride towards a future free of software bugs. Backus’ team was so thrilled by FORTRAN’s success that they included hardly any tools in the language for detecting and analyzing software errors. They believed that these tools weren’t needed anymore, as the new language had reduced the number of bugs considerably.

FORTRAN Is Made A Standard

In 1966, the American Standards Association – the forerunner of today’s American National Standards Institute – made FORTRAN a standard language. This was a big deal since FORTRAN had started out on IBM-704 computers: making it a national standard meant that it could be used, in the same form, on computers from other manufacturers as well. Programming became easy and fun! It was no longer restricted to hardcore mathematicians and engineers. Amateur programmers taught themselves FORTRAN from books. Assembly was almost gone, and FORTRAN had taken over the market.

FORTRAN’s success ushered in a new generation of high-level programming languages like COBOL, ALGOL, Ada, and C – the most successful of them all. These languages not only improved on FORTRAN’s ideas and made programming even easier, but they also made programming suitable for a larger variety of tasks: from accounting to artificial intelligence.

Nowadays, there are hundreds of high-level programming languages to choose from. Some modern languages such as Python or JavaScript are so simple that even children can learn how to use them easily!

You might be surprised to learn that fifty years after it was first released – FORTRAN is still alive and kicking! It’s gone through changes and updates over the years, but is very much still relevant today – and some scientists and researchers still prefer it for complicated calculations. This is an amazing feat for a language designed in an era when programming was done with punched cards!

John Backus passed away in 2007. He was fortunate enough to see how the language he helped create changed the programming world. It transformed programming from a dreadful task – to a sort of positive challenge, even a hobby embraced by millions around the world.

What About The Bugs?!

All’s well that ends well! But wait a minute! Wait just a minute… are we not forgetting something? What about bugs? Did high-level languages solve the problem of software errors?

No, unfortunately, they didn’t. You see, while high-level languages did make programming a lot easier – they also allowed software to become much more complex. It’s like having the option to buy LEGO blocks instead of fabricating them yourself: you can invest all your time in creating bigger and bigger creations instead of doing everything from scratch. Similarly, high-level languages allow developers to add more features to their programs – and so the advantages of high-level languages were balanced by the ever increasing complexity of the programs themselves. The bugs never went away.

A Fundamental Problem in Software Design

In the 1960s it became clear to many computer programmers that reliability was a fundamental problem in software design. Despite all the great developments, it was still almost impossible to create a piece of software with no errors. I mean a complex, feature-rich piece of software, not some trivial program.

Worse yet, it seemed that it was getting harder and harder to complete a software development project “successfully.” What do I mean by “successfully”? A successful software project is one whose output is a high-quality, bug-free piece of code, tailored to meet the customer’s specifications, completed within schedule and without budget overruns. This goal was proving to be more elusive with every passing year.

Modern research clearly shows that when it comes to software projects – failure rates are extremely high compared to other engineering projects. A basic software project has a twenty-five percent chance of going over budget, or over its deadline, or producing software that doesn’t meet the customer’s expectations. That’s one out of every four projects! And if the project lasts more than eighteen months, or if the staff is larger than twenty people – the risk of failing becomes more than fifty percent. When it comes to even larger projects that go on for years and involve many teams of programmers, the likelihood of failure is close to a hundred percent. About ten percent of those projects fail so dramatically, that the entire software is thrown away and never used at all. What a waste!

This realization hit many programmers badly. I’m a software developer myself, you know – and we developers take our profession very seriously. We can spend years – and I’m not joking – debating the proper way to capitalize variable names in the code.

Over time, software developers started looking enviously at other engineering fields, such as architecture, and asked themselves why software couldn’t be more like them. I mean, architects and engineers build tall buildings, long bridges and other structures that are reliable, that don’t go over budget or schedule (well, at least most of the time), and that are built according to spec. Experience has taught software developers that their projects usually won’t reach the same result.

In 1968 some of the world’s leading software engineers and computer scientists gathered at a NATO conference in Germany, in order to discuss this obvious difficulty of creating successful software. The conference didn’t result in any solution, but it did give the problem a name: “The Software Crisis.”

Building A New Airport

So, what does the software crisis look like in real life? Here’s an example.

In 1989, the city of Denver, Colorado, announced it was embarking on one of the most ambitious projects of its history: building a new modern airport that would carry state tourism and local business into the twenty-first century.

The jewel in the modern airport’s crown was to be a new and sophisticated baggage transportation system. I mean, baggage transportation is an important part of every modern airport. If a suitcase takes too long to get from point to point – passengers might end up missing their connecting flights. If a suitcase gets lost – that’s a ruined vacation. It’s obvious why the project’s leaders took their new baggage transportation system very seriously.

The company that was chosen to plan the new system was BAE, an experienced company within its field. BAE’s engineers examined the blueprints of the future airport and realized that this was going to be a momentous challenge: Denver’s airport was going to be much larger than was usual for airports, and this meant that transporting a piece of baggage from one end of the airport to the other might take up to 45 minutes! So to solve that problem, BAE designed the most advanced baggage transportation system ever built.

A Breathtaking Design

The plan called for a fully automated system. From the moment an airplane lands, until the moment the luggage is picked up from the carousel – no human hands would touch it. Barcode scanners would identify the suitcase’s destination, and a computerized control system would make sure that an empty cart would be waiting for it at just the right place and time, to transport it as quickly as possible via underground tracks, to the correct terminal. Timing was incredibly important. The carts weren’t even supposed to stop: suitcases were supposed to fall from one track onto a moving cart at exactly the right moment.

Just to give you some perspective on BAE’s breathtaking design: there were 435,000 miles of cables and wires, 25 miles of underground tracks and 10,000 electrical engines to power them. The control system included about 300 computers to supervise the engines and carts. It is no wonder that Denver’s mayor said that the new project was as challenging as, quote, “building the Panama Canal.”

The entire city followed the project with interest. The new airport was supposed to open at the end of 1993, but a few weeks before the due date the mayor announced that the opening would be delayed due to some final testing of the baggage transportation system. No one was too surprised: after all, this was a complicated and innovative new system, and it was likely that testing it would take some time.

A Breathtaking Failure

But no one was prepared for the embarrassment that took place in March 1994, when the proud mayor invited the media to a celebrated demonstration of the new system.

Instead of an efficient and punctual transportation system, the amazed journalists watched, in a mixture of horror and delight, a sort of technological version of Dante’s Inferno. Carts that moved too quickly fell off the tracks and rolled on the floor. Suitcases flew in the air because the carts that were supposed to wait for them never came. Pieces of clothing that fell out of the suitcases got shredded in the engines or tangled in the wheels of passing carts. Suitcases that somehow made their way to an empty cart reached the wrong terminal because barcode scanners failed to identify the stickers on the suitcases. In short – nothing worked properly.

The journalists who witnessed the demonstration didn’t know whether to laugh or cry. If it weren’t for the project costing the taxpayers close to 200 million dollars, it could have been like a classic Charlie Chaplin comedy. The headlines of the following morning undoubtedly made the mayor cringe.

Behind the scenes, there were desperate attempts to salvage the system. Technicians ran to fix the tracks, but finding a solution at one spot created two other problems elsewhere. Each and every day of delay cost the city of Denver another one million dollars. By the end of 1994, with the airport’s project nowhere near completion – Denver faced the very real possibility of bankruptcy.

The mayor had no choice; after several external tests and emergency discussions, it was decided to dismantle a big chunk of the new automated system. Instead, a more traditional, manual system was put in place. The final cost of the airport project, including the cost of the new system, was 375 million dollars – twice the original budget.

In February 1995, almost a year and a half after the original date, the new Denver airport opened to flights, travelers, and suitcases. Only one airline, United, agreed to use the new baggage transportation system, but it, too, eventually gave up. The system experienced so many problems and errors that the monthly maintenance cost was close to a million dollars. In 2005, United Airlines announced it would stop using the automated system, and go back to a manual transportation system.

An Investigation

So what went wrong in Denver? Why was the project’s failure so massive and absolute?

Several investigations highlighted the many factors leading to the failure of the Denver Airport project. Some of these factors included management decisions that were too affected by political considerations, changes that were made during construction just to please the airlines, and an electricity supply that was constantly interrupted. And as if all that wasn’t enough, the project’s main architect died unexpectedly. In short, everything that could have gone wrong – did. Having said that, most of the investigators agreed that the biggest problem of this ambitious project was its software component.

As we mentioned before, the original plan dictated that 3000 carts would travel independently around the airport using the underground tracks. In order for that to happen, about 300 computers were supposed to communicate with each other and make rapid decisions in “real time.”

For example, in one common scenario an empty cart traveled to a specific location in order to pick up a suitcase. Its route turned out to be quite complicated: the empty cart had to use several tracks, change directions, and perhaps move over or under other carts. Also, a cart’s specific route depended not only on its location but on the location of other carts as well. This meant that if a “traffic jam” occurred for any reason, the software had to recalculate an alternative route. Let’s not forget that we are talking about thousands of carts, each going to a different destination and each supposed to arrive on time; and that the decisions were supposed to be made by hundreds of computers running simultaneously!
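To get a feel for the rerouting problem, here is a minimal sketch for a single cart on a tiny, made-up track map. It uses a textbook breadth-first search and is in no way a reconstruction of BAE’s actual software, whose algorithms aren’t described here. The junction names, the `tracks` map and the function name `shortest_route` are all hypothetical.

```python
from collections import deque


def shortest_route(tracks, start, goal, blocked=frozenset()):
    """Breadth-first search for the route with the fewest hops, avoiding blocked junctions."""
    queue = deque([[start]])
    visited = {start}
    while queue:
        path = queue.popleft()
        junction = path[-1]
        if junction == goal:
            return path
        for neighbor in tracks.get(junction, []):
            if neighbor not in visited and neighbor not in blocked:
                visited.add(neighbor)
                queue.append(path + [neighbor])
    return None  # no route exists


# Hypothetical one-way track map: A reaches F either via B or via C and D.
tracks = {"A": ["B", "C"], "B": ["F"], "C": ["D"], "D": ["F"], "F": []}

normal = shortest_route(tracks, "A", "F")
detour = shortest_route(tracks, "A", "F", blocked={"B"})
stuck = shortest_route(tracks, "A", "F", blocked={"B", "D"})

print(normal)  # ['A', 'B', 'F']
print(detour)  # ['A', 'C', 'D', 'F']
print(stuck)   # None
```

For one cart and five junctions this is easy. The hard part in Denver was that every cart’s position changed the “blocked” set for every other cart, many times a second, across hundreds of computers that had to agree with each other.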

Twenty software programmers worked on the project for two whole years, but the system was so complicated that none of them could see the entire picture and truly understand, from a “bird’s eye view,” how the system actually behaved. The final result was a complicated piece of software that was full of errors. Denver’s airport project is a perfect example of the Software Crisis: a large project that went over schedule, over budget, and didn’t reach its goals.

Is There A Solution To The Software Crisis?

Dr. Winston Royce, a leading computer scientist, defined the situation best in 1991 when he said:

“The construction of new software that is both pleasing to the user/buyer and without latent errors is an unexpectedly hard problem. It is perhaps the most difficult problem in engineering today and has been recognized as such for more than 15 years. […]. It has become the longest continuing “crisis” in the engineering world, and it continues unabated.”

So what can we do? Is there a solution to the software crisis? That question will be the focus of our next episode. We will get to know the two methods developed over time to tackle the challenges of creating complicated software: Waterfall and Agile. We’ll also tell the incredible story of a computerized system developed for the FBI at a cost of half a billion dollars… and how it turned out to be a breathtaking failure.

And finally, we will get to know Fred Brooks – a computer scientist who wrote an influential book called “The Mythical Man-Month”. In his book, Brooks asks – Is there a “silver bullet” that will allow us to solve the software crisis?


Credits

Maschinenpart By Jairo Boilla

Song 007 – By tarbolovanolo

Crypto, Industrial Cinematic, Batty Slower – By Kevin MacLeod

Cartoon Record Scratch Sound Effects By Nick Judy

I did not make my comment clear as to the company I was referring to. It is United Airlines. I attempted to support Unimatic programs and found it very difficult due to the fact that the Fortran V library was compromised and no longer provided the run time diagnostic information that it was originally designed to do. Most of the programmers that support United’s Fortran applications are not assembler language knowledgeable programmers and therefore when there is a failure it takes them longer to diagnose and correct the program(s) causing the failure. Although I attempted to make corrections to the library it was not really appreciated by the group that had absolutely no understanding of what I was doing.

One must remember also that Unimatic was written in Fortran before Univac had developed a Cobol compiler. That was about forty years or more ago.

Thanks for the enlightening comment, Herb! 🙂

Ran

The largest challenge with United’s maintenance of Fortran is very simple. The Fortran compiler library has been seriously compromised. Personally, I supported the compiler and library when I worked for Univac/Unisys. The library needs to be corrected and by doing this it will enable new programmers to more successfully support the Fortran programs with diagnostics that would be generated by the corrected library elements (programs).